The New and Extended SONICOM HRTF Dataset

SONICOM researchers recently presented an extended version of the SONICOM Head-Related Transfer Function (HRTF) dataset at Forum Acusticum – Euronoise 2025 in Malaga, Spain.

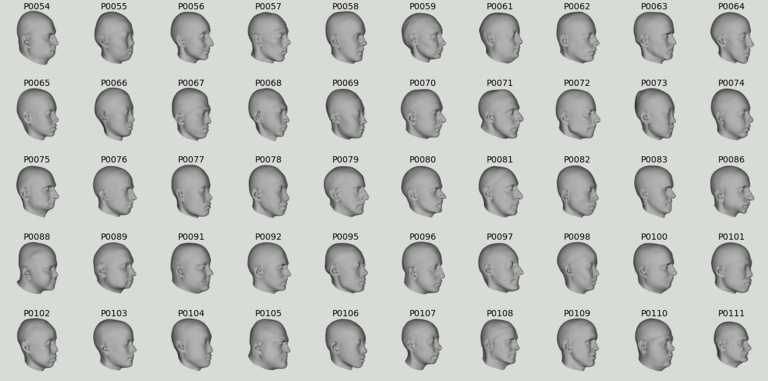

The extended version adds 100 more measured participants, together with associated demographic data, pre-processed 3D scans and HRTFs simulated using Mesh2HRTF. It also introduces the Spatial Audio Metrics (SAM) toolbox for HRTF analysis and visualisation.

This extension not only enhances the dataset’s usability for spatial acoustics research but also paves the way for scalable, cost-effective HRTF personalisation in immersive applications.

“As machine learning and AI become increasingly central to making HRTF personalisation more accessible to everyday users, our goal with the extended SONICOM dataset is to support that shift. We’ve added more subject data, along with associated demographics and processed 3D scans for easier HRTF generation, to help meet the needs of data-hungry algorithms and enable more representative, transparent training datasets.

We also aimed to streamline the pipeline to link anatomical data to corresponding HRTFs and provide easier visualisation and analysis with the SAM toolbox. This allows researchers to iteratively refine and optimise HRTF synthesis and aid in understanding what aspects are essential for immersive spatial audio” – Dr Katarina Poole, Imperial College London

Dr Kat Poole presenting the updated HRTF dataset at Forum Acusticum 2025

Building upon a widely used resource

Launched in May 2023, the SONICOM HRTF dataset is one of the largest HRTF datasets available and includes 200 measured HRTFs along with photogrammetry data and high-resolution 3D scans of the heads of the subjects. The dataset has been cited in 32 different studies at the time of writing and currently represents one of the more widely used publicly available HRTF datasets.

Despite its contributions to the field, the SONICOM dataset, like many existing HRTF datasets, faces limitations that reduce its applicability in modern spatial audio research, including:

- The amount of available data remains insufficient to effectively train and validate deep learning models;

- Acoustically measured datasets can be subject to inaccuracies due to movement and inherent noise within the equipment. This can make aggregating data across HRTF datasets non-trivial.

To address these limitations, this new work extends the SONICOM HRTF dataset with additional subjects (327 as of writing) and introduces a large set of synthetic HRTFs generated from the processed 3D scans using Mesh2HRTF (200 participants). Synthetic HRTFs aim to mitigate these issues: because each HRTF is simulated from a scan, subject and experimenter error, as well as differences in recording apparatus, are removed.

Additionally, the Spatial Audio Metrics toolbox has been developed and released to facilitate standardised HRTF evaluation and visualisation. By leveraging computational HRTF generation, this dataset significantly expands the potential for machine-learning applications, spatial audio research, and personalised HRTF synthesis.
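To give a flavour of what a standardised comparison between two HRTFs can look like, here is a minimal sketch of one commonly used measure, log-spectral distortion, written with plain NumPy. It is not taken from the SAM toolbox and does not use its API; the function name `log_spectral_distortion`, the FFT length and the toy impulse responses are all assumptions made for illustration.

```python
import numpy as np


def log_spectral_distortion(hrir_a, hrir_b, n_fft=256, eps=1e-12):
    """RMS difference, in dB, between the magnitude spectra of two impulse responses.

    Hypothetical helper for illustration only; not part of the SAM toolbox.
    """
    mag_a = np.abs(np.fft.rfft(hrir_a, n=n_fft))
    mag_b = np.abs(np.fft.rfft(hrir_b, n=n_fft))
    # eps guards against division by zero and log of zero in empty bins.
    diff_db = 20.0 * np.log10((mag_a + eps) / (mag_b + eps))
    return float(np.sqrt(np.mean(diff_db ** 2)))


# Toy data standing in for one direction and one ear of a measured HRTF
# and its simulated counterpart (assumption: real data would be loaded
# from the dataset files instead).
rng = np.random.default_rng(0)
measured = rng.standard_normal(128) * np.exp(-np.arange(128) / 16.0)
synthetic = 0.5 * measured  # same spectral shape, half the amplitude

lsd_same = log_spectral_distortion(measured, measured)      # identical inputs give 0 dB
lsd_scaled = log_spectral_distortion(measured, synthetic)   # roughly 6 dB, i.e. 20*log10(2)

print(f"identical: {lsd_same:.2f} dB, half amplitude: {lsd_scaled:.2f} dB")
```

In practice such a metric would be averaged over directions and ears, and often restricted to a perceptually relevant frequency range; those choices are left out of this sketch.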

Future work will focus on increasing the number of participants and processed scans, as well as testing the perceptual efficacy of the synthetic HRTFs within this dataset in comparison to measured HRTFs.

Access the extended SONICOM HRTF dataset here.